Finite Sample Error Bounds for Ordinal Embedding

Presenter

May 19, 2016

Keywords:

- ordinal embedding

Abstract

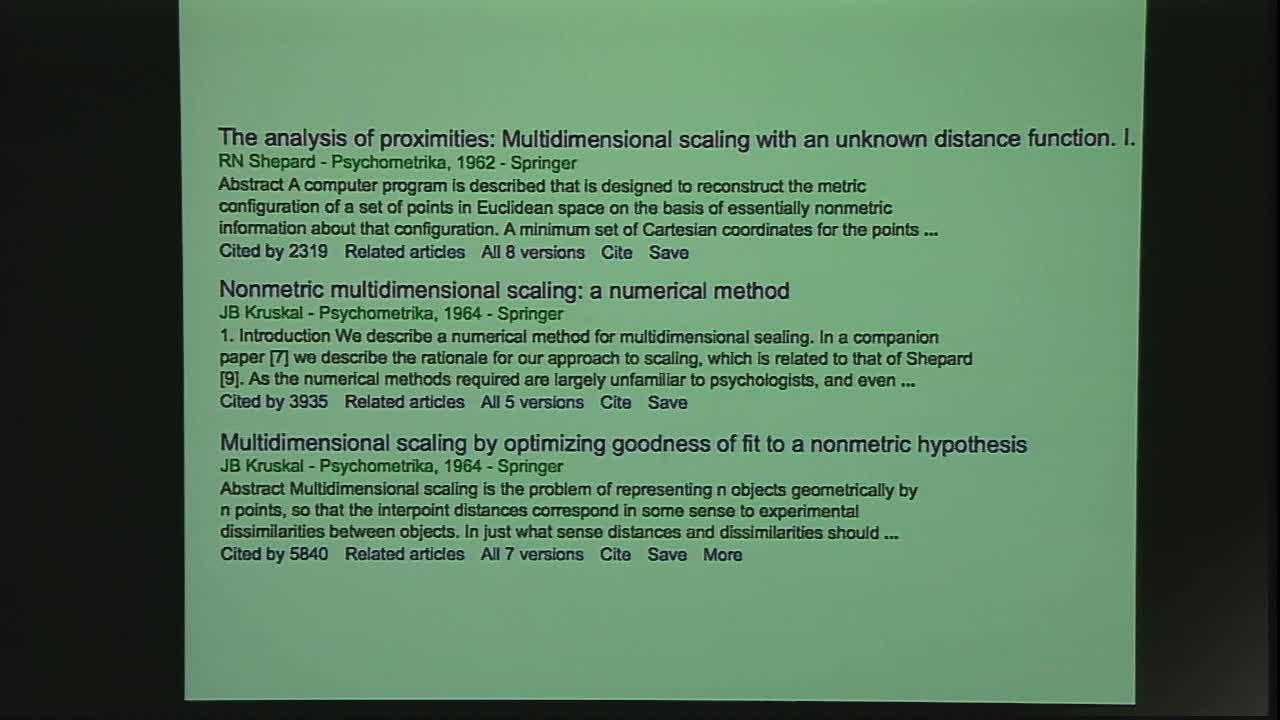

Ordinal embedding, also known as non-metric multidimensional scaling, aims to represent items as points in d-dimensional Euclidean space so that the distances between points agree as well as possible with a given set of ordinal comparisons such as item i is closer to item j than to item k. This classic problem is often used to visualize perceptual similarities. Recently, several authors have proposed a variety of algorithms for learning a metric embedding from ordinal information. There has also been some theoretical progress towards characterizing the consistency of ordinal embedding methods. For example, it has been shown that the correct embedding can be learned in limit as the number of items grows. However, a shortcoming of all prior work is the lack of prediction and embedding error bounds for problems involving a finite number of items. This talk addresses this problem, developing error bounds for ordinal embedding algorithms. The bounds explicitly show the dependence on the number of ordinal comparisons relative to the number of items and the dimension of the embedding.