When and How are (promise) Constraint Satisfaction Problems Efficiently Solvable?

Presenter

October 21, 2024

Abstract

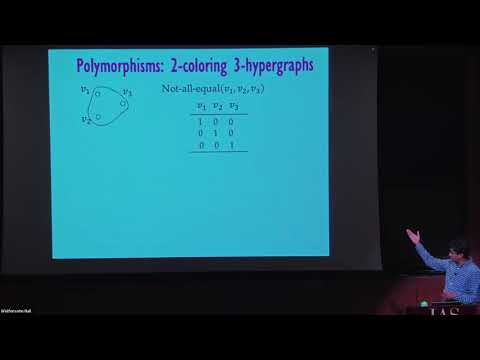

Computational problems exhibit a diverse range of behaviors in terms of how quickly and effectively they can be solved. What underlying mathematical structure (or lack thereof) in a computational problem leads to an efficient algorithm for solving it (or dictates its intractability)? Given the vast landscape of problems and algorithmic approaches, it would be simplistic to hope for a universal theory explaining the underpinnings of easiness/hardness. Yet, in the realm of constraint satisfaction problems (CSP), the algebraic dichotomy theorem gives a definitive answer: a polynomial time algorithm exists when there are non-trivial local operations called polymorphisms under which the solution space is closed; otherwise the problem is NP-complete. Emboldened by this, one might speculate a polymorphic principle in more general contexts, with appropriate notions of symmetries governing the existence of efficientalgorithms. Beginning with some background on CSP and the polymorphic approach to understand their complexity, the talk will discuss some extensions beyond CSP where the polymorphic principle seems promising (yet far from understood). In particular, we will discuss ``promise CSP'' where one is allowed to satisfy a relaxed version of the constraints, a framework that includes problems such as approximate graph coloring and discrepancy minimization. We will provide glimpses of the emerging theory characterizing the (in)tractability of interesting classes of promise CSP and the ability of natural classes of algorithms (linear and affine relaxations) to solve them, and highlight some of the many challenges that remain.