Abstract

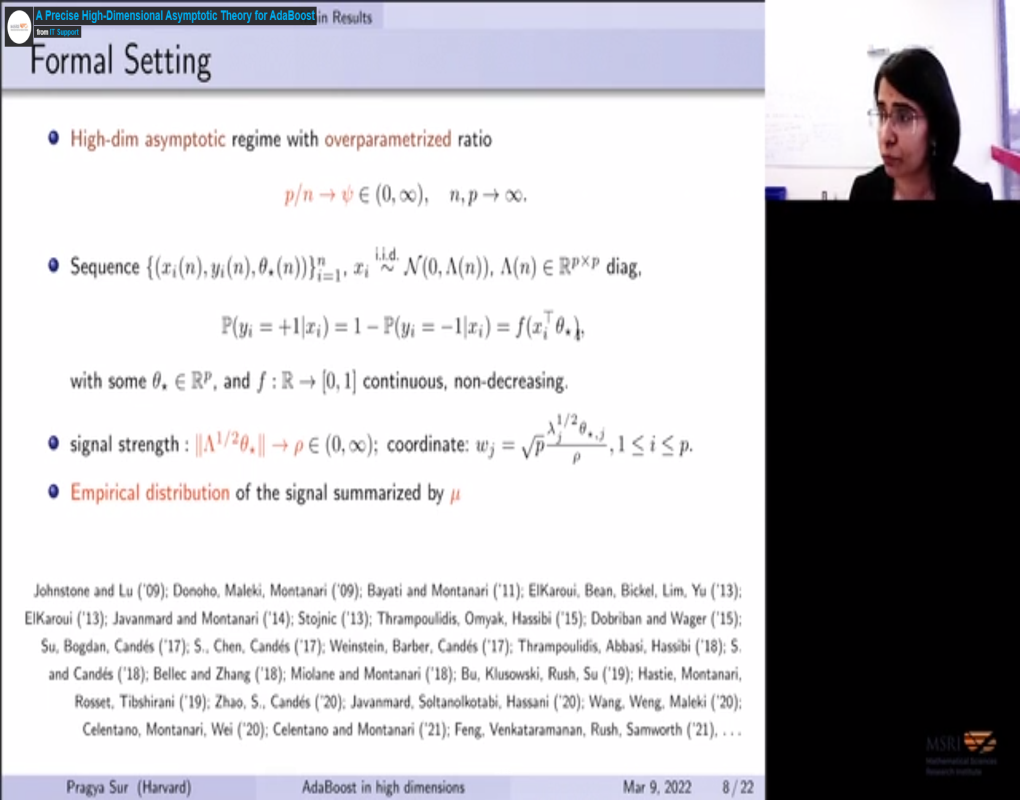

Ensemble learning algorithms represent a cornerstone of traditional statistical learning. Recent works on cross-study replicability (e.g. Patil and Parmigiani PNAS '18) demonstrate that ensembling single-study learners can significantly improve out-of-study generalization capabilities of learning algorithms. Despite these advances, sharp characterization of the generalization error of ensembling algorithms is often challenging, even in the single study setting. Motivated by these considerations, we conduct an in-depth study of the generalization performance of boosting algorithms, specifically AdaBoost, a canonical ensemble learning algorithm. For this talk, we will focus on the problem of classification in the context of a single observed training data that is both high-dimensional and (asymptotically) linearly separable. We utilize the classical connection of AdaBoost with min-$\ell_1$-norm interpolation (Zhang and Yu AOS '05), and under specific data-generating models, establish an asymptotically exact characterization of the generalization performance. This, in turn, improves upon existing upper bounds in our setting. As a byproduct, our result formalizes the following fact in the context of AdaBoost : overparametrization helps optimization. Our analysis is relatively general and has potential applications for other ensembling approaches. Time permitting, I will discuss some of these extensions. This is based on joint work with Tengyuan Liang.