Computational Complexity of Learning ReLUs

Presenter

April 15, 2021

Abstract

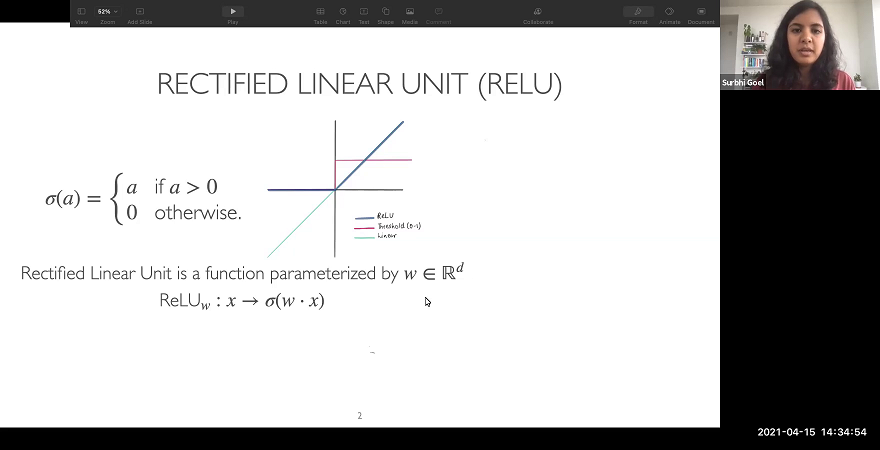

A major challenge in the theory of deep learning is to understand the computational complexity of learning basic families of neural networks (NNs). Even learning a single Rectified Linear Unit (ReLU), one of the most commonly used activation functions in deep learning, can be challenging, because the associated optimization problem is non-convex. In this talk, I will give an overview of hardness results for learning ReLUs with noisy labels in both distribution-independent and distribution-dependent settings. I will then describe positive results that nearly match these lower bounds, including a simple gradient-based algorithm that approximately learns a ReLU in polynomial time for any well-behaved distribution.
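To make the learning problem concrete, here is a minimal sketch of fitting a single ReLU by plain (sub)gradient descent on the empirical squared loss. It is an illustration only and is not claimed to be the algorithm presented in the talk. Everything in it is an assumption chosen for the example: standard Gaussian inputs, small additive Gaussian label noise, a zero initialization, and the hypothetical helper names `make_data`, `squared_loss`, and `gradient_descent`. This benign setting is much easier than the noise models for which hardness results are typically stated.

```python
import random


def relu(z):
    return z if z > 0.0 else 0.0


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def make_data(w_true, n, noise_std, rng):
    """Draw x ~ N(0, I) and labels y = relu(<w_true, x>) + Gaussian noise (assumed model)."""
    xs, ys = [], []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in w_true]
        xs.append(x)
        ys.append(relu(dot(w_true, x)) + rng.gauss(0.0, noise_std))
    return xs, ys


def squared_loss(w, xs, ys):
    """Empirical squared loss of the ReLU with weight vector w (non-convex in w)."""
    return sum((relu(dot(w, x)) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, steps=100, lr=0.5):
    """Subgradient descent on the empirical squared loss, started at w = 0."""
    n, d = len(xs), len(xs[0])
    w = [0.0] * d
    for _ in range(steps):
        grad = [0.0] * d
        for x, y in zip(xs, ys):
            z = dot(w, x)
            # Subgradient convention: treat relu'(0) as 1, so the zero
            # initialization still receives a nonzero update.
            active = 1.0 if z >= 0.0 else 0.0
            coeff = 2.0 * (relu(z) - y) * active / n
            for j in range(d):
                grad[j] += coeff * x[j]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


if __name__ == "__main__":
    rng = random.Random(0)
    w_true = [1.0, -0.5, 0.25]  # hypothetical target weights
    xs, ys = make_data(w_true, n=1000, noise_std=0.1, rng=rng)
    w_hat = gradient_descent(xs, ys)
    print("learned weights:", w_hat)
    print("training loss:", squared_loss(w_hat, xs, ys))
```

In this sketch the step size 0.5 is tuned to the Gaussian assumption, under which the loss curvature near the target is roughly the identity. Other input distributions or noise models would likely need a different step size, and descent on this non-convex loss is not guaranteed to succeed in general.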