Latent Stochastic Differential Equations for Irregularly-Sampled Time Series

Presenter

April 30, 2020

Abstract

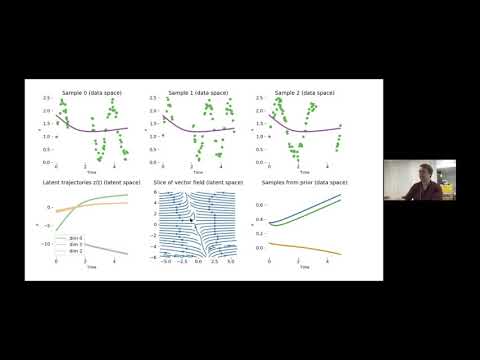

Much real-world data is sampled at irregular intervals, but most time series models require regularly sampled data. Continuous-time models address this problem, but until now only deterministic (ODE) models or linear-Gaussian models could be trained efficiently with millions of parameters. We construct a scalable algorithm for computing gradients of samples from stochastic differential equations (SDEs), and for gradient-based stochastic variational inference in function space, both using adaptive black-box SDE solvers. This allows us to fit a new family of richly parameterized distributions over time series. We apply latent SDEs to motion capture data, and will also discuss prototypes of infinitely deep Bayesian neural networks.
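To make concrete what drawing a sample from an SDE at irregular observation times means, here is a minimal sketch using a plain Euler-Maruyama scheme in NumPy. It is not the method of the talk: it steps directly between the observation times rather than using an adaptive solver, and it computes no gradients. The function name `euler_maruyama`, the Ornstein-Uhlenbeck drift and diffusion, and the time grid are all illustrative assumptions.

```python
import numpy as np

def euler_maruyama(drift, diffusion, z0, times, rng):
    """Sample a path of dZ = drift(Z, t) dt + diffusion(Z, t) dW.

    `times` may be irregularly spaced; one Euler-Maruyama step is taken
    between each pair of consecutive time points (an illustrative
    simplification, not an adaptive solver).
    """
    times = np.asarray(times, dtype=float)
    z0 = np.asarray(z0, dtype=float)
    path = np.empty((len(times),) + z0.shape)
    path[0] = z0
    for i in range(1, len(times)):
        t = times[i - 1]
        dt = times[i] - t
        z = path[i - 1]
        # Brownian increment over a step of length dt has variance dt.
        dw = rng.normal(scale=np.sqrt(dt), size=z0.shape)
        path[i] = z + drift(z, t) * dt + diffusion(z, t) * dw
    return path

# Hypothetical example: a 2-D Ornstein-Uhlenbeck process observed
# at irregular times.
rng = np.random.default_rng(0)
times = np.array([0.0, 0.1, 0.15, 0.4, 1.0])
z0 = np.array([1.0, -1.0])
path = euler_maruyama(
    drift=lambda z, t: -z,          # mean reversion toward zero
    diffusion=lambda z, t: 0.3,     # constant noise scale
    z0=z0,
    times=times,
    rng=rng,
)
```

Each row of `path` is the state at the corresponding entry of `times`. Setting the diffusion to zero reduces the scheme to an ordinary Euler step for the underlying ODE.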