Polynomial-time tensor decompositions via sum-of-squares

Presenter

March 21, 2016

Keywords:

- Computer Science and Discrete Mathematics (CSDM)

Abstract

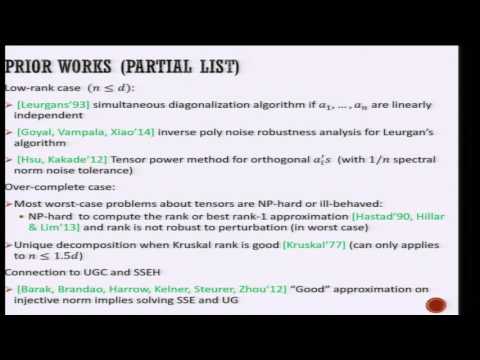

Tensor decompositions have been the key algorithmic components in provable learning of a wide range of hidden variable models such as topic models, Gaussian mixture models, independent component analysis, dictionary learning. Despite its success, one of the challenges in this area is to decompose over-complete 3rd-order tensors robustly. In most applications, it’s important to handle low order tensor with good noise tolerance to achieve small sample complexity, and over-complete tensors show up when the dimensionality of the latent representation is larger than the ambient dimension. In this talk I will present a polynomial time sum-of-squares algorithm that decomposes random 3rd-order tensor with rank at most $d^{1.5-\delta}$ where $d$ is the ambient dimension. Previously only quasi-polynomial time algorithms (Ge and Ma’15) were known to achieve these guarantees. A new ingredient in the analysis of our rounding algorithms is that we extracted a moment matrix with good spectral gap from solutions to sum-of-squares relaxations. To enable this analysis we augment sum-of-squares relaxations with certain maximum entropy constraints. This technique could potentially have applications elsewhere. Based on joint work with Jonathan Shi and David Steurer.