Team 6: Visual words: Text analysis concepts for computer vision

Presenter

August 5, 2009

Keywords:

- Computational issues

MSC:

- 65D19

Abstract

Illustration of SIFT features computed using the VLFeat library

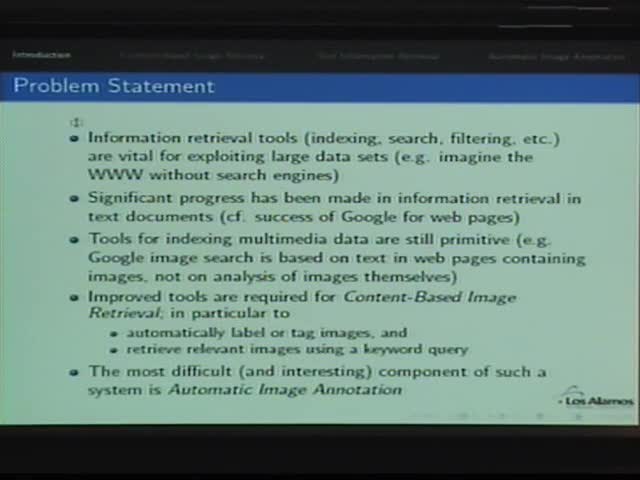

Project Description:

Large collections of image and video data are becoming increasingly common in a diverse range of applications, including consumer multimedia (e.g., Flickr and YouTube), satellite imaging, video surveillance, and medical imaging. One of the most significant problems in exploiting such collections is the retrieval of useful content, since the collections are often large enough to make a manual search impossible. These problems are addressed in computer vision research areas such as content-based image retrieval, automatic image tagging, semantic video indexing, and object detection. A sample of the exciting work being done in these areas can be obtained by visiting the websites of leading research groups such as Caltech Computational Vision, Carnegie Mellon Advanced Multimedia Processing Lab, LEAR, MIT CSAIL Vision Research, Oxford Visual Geometry Group, and WILLOW.

One of the most promising ideas in this area is that of visual words, constructed by quantizing invariant image features such as those generated by SIFT.
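As a rough illustration of what "quantizing" means here, the sketch below clusters local descriptors with plain k-means and treats each cluster center as one visual word; an image is then summarized by counting how many of its descriptors fall nearest to each word. This is only a minimal sketch under stated assumptions: the function names (`build_vocabulary`, `bag_of_words`) are hypothetical, the farthest-first initialization is one simple choice among many, and the 2-D toy points stand in for real SIFT descriptors, which are 128-dimensional.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(centers, point):
    """Index of the center (visual word) closest to the given descriptor."""
    return min(range(len(centers)),
               key=lambda k: squared_distance(centers[k], point))


def build_vocabulary(descriptors, n_words, n_iter=20):
    """Cluster descriptors with k-means; the centers act as visual words.

    Assumption: centers are seeded by farthest-first traversal so that the
    result is deterministic. Real systems often use random or k-means++ seeds.
    """
    centers = [list(descriptors[0])]
    while len(centers) < n_words:
        farthest = max(
            descriptors,
            key=lambda d: min(squared_distance(c, d) for c in centers))
        centers.append(list(farthest))

    for _ in range(n_iter):
        # Assignment step: group descriptors by their nearest center.
        clusters = [[] for _ in range(n_words)]
        for d in descriptors:
            clusters[nearest(centers, d)].append(d)
        # Update step: move each center to the mean of its members.
        for k, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous center
                centers[k] = [sum(col) / len(members) for col in zip(*members)]
    return centers


def bag_of_words(centers, image_descriptors):
    """Histogram of visual-word occurrences for one image."""
    histogram = [0] * len(centers)
    for d in image_descriptors:
        histogram[nearest(centers, d)] += 1
    return histogram


# Toy 2-D "descriptors" pooled from a training set (stand-ins for SIFT).
toy_descriptors = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2),
                   (10.0, 10.1), (10.2, 9.9), (9.9, 10.0)]
vocabulary = build_vocabulary(toy_descriptors, n_words=2)

# Descriptors from one new image, mapped onto the learned vocabulary.
histogram = bag_of_words(vocabulary, [(0.1, 0.1), (0.0, 0.0), (10.0, 10.0)])
print(histogram)  # prints [2, 1]
```

The resulting histogram plays the same role as a word-count vector for a text document, which is what makes text analysis methods applicable to images.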

These visual word representations allow text document analysis techniques (Latent Semantic Analysis, for example) to be applied to computer vision problems. An interesting example is the use of Probabilistic Latent Semantic Analysis or Latent Dirichlet Allocation to learn to recognize categories of objects (e.g., car, person, tree) within an image, using a training set that is labeled only to indicate the object categories present in each image, with no indication of where the objects are located. In this project we will explore the concept of visual words, understand their properties and relationship with text words, and consider interesting extensions and new applications.
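To make the text analysis connection concrete, the following is a minimal sketch of Probabilistic Latent Semantic Analysis fitted by expectation-maximization to a matrix of visual-word counts (rows are images, columns are visual words). It is an illustration under assumptions, not the method of any particular paper in the reference list: the function name `plsa`, the toy count matrix, the number of topics, and the iteration count are all made up for the example.

```python
import random


def normalize(row):
    """Scale non-negative values so they sum to 1 (uniform if all are zero)."""
    total = sum(row)
    if total == 0:
        return [1.0 / len(row)] * len(row)
    return [x / total for x in row]


def plsa(counts, n_topics, n_iter=100, seed=0):
    """Fit P(word | topic) and P(topic | image) to a count matrix with EM."""
    rng = random.Random(seed)
    n_docs, n_words = len(counts), len(counts[0])
    # Random positive starting point; EM only reaches a local optimum.
    p_w_z = [normalize([rng.random() + 0.1 for _ in range(n_words)])
             for _ in range(n_topics)]
    p_z_d = [normalize([rng.random() + 0.1 for _ in range(n_topics)])
             for _ in range(n_docs)]

    for _ in range(n_iter):
        acc_w_z = [[0.0] * n_words for _ in range(n_topics)]
        acc_z_d = [[0.0] * n_topics for _ in range(n_docs)]
        for d in range(n_docs):
            for w in range(n_words):
                if counts[d][w] == 0:
                    continue
                # E-step: posterior over topics for this (image, word) pair.
                posterior = normalize(
                    [p_z_d[d][z] * p_w_z[z][w] for z in range(n_topics)])
                for z in range(n_topics):
                    expected = counts[d][w] * posterior[z]
                    acc_w_z[z][w] += expected
                    acc_z_d[d][z] += expected
        # M-step: renormalize the expected counts into probabilities.
        p_w_z = [normalize(row) for row in acc_w_z]
        p_z_d = [normalize(row) for row in acc_z_d]
    return p_w_z, p_z_d


# Hypothetical counts: 4 images over a 6-word visual vocabulary.
toy_counts = [
    [5, 4, 6, 0, 0, 0],
    [6, 5, 4, 0, 1, 0],
    [0, 0, 1, 5, 6, 4],
    [0, 0, 0, 4, 5, 6],
]
word_given_topic, topic_given_image = plsa(toy_counts, n_topics=2)
for index, mixture in enumerate(topic_given_image):
    print(index, [round(p, 3) for p in mixture])
```

On input like this one would expect the first two images and the last two images to load mainly on different topics, although the topic order is arbitrary and the outcome depends on the random start. No object locations are supplied anywhere; the topics are inferred from co-occurrence of visual words alone, which is the sense in which such training is weakly supervised.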

References:

[1] Lowe, David G., Distinctive Image Features from Scale-Invariant Keypoints, International Journal of Computer Vision, vol. 60, no. 2, pp. 91-110, 2004. doi: 10.1023/b:visi.0000029664.99615.94

[2] Leung, Thomas K. and Malik, Jitendra, Representing and Recognizing the Visual Appearance of Materials using Three-dimensional Textons, International Journal of Computer Vision, vol. 43, no. 1, pp. 29-44, 2001. doi: 10.1023/a:1011126920638

[3] Liu, David and Chen, Tsuhan, DISCOV: A Framework for Discovering Objects in Video, IEEE Transactions on Multimedia, vol. 10, no. 2, pp. 200-208, 2008. doi: 10.1109/tmm.2007.911781

[4] Fergus, Rob, Perona, Pietro and Zisserman, Andrew, Weakly Supervised Scale-Invariant Learning of Models for Visual Recognition, International Journal of Computer Vision, vol. 71, no. 3, pp. 273-303, 2007. doi: 10.1007/s11263-006-8707-x

[5] Philbin, James, Chum, Ondřej, Isard, Michael, Sivic, Josef and Zisserman, Andrew, Object retrieval with large vocabularies and fast spatial matching, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2007. doi: 10.1109/CVPR.2007.383172

[6] Yang, Jun, Jiang, Yu-Gang, Hauptmann, Alexander G. and Ngo, Chong-Wah, Evaluating bag-of-visual-words representations in scene classification, Proceedings of the international workshop on multimedia information retrieval (MIR '07), pp. 197-206, 2007. doi: 10.1145/1290082.1290111

[7] Yuan, Junsong, Wu, Ying and Yang, Ming, Discovery of Collocation Patterns: from Visual Words to Visual Phrases, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1-8, 2007. doi: 10.1109/cvpr.2007.383222

[8] Fergus, Rob, Fei-Fei, Li, Perona, Pietro and Zisserman, Andrew, Learning object categories from Google's image search, IEEE International Conference on Computer Vision (ICCV), vol. 2, pp. 1816-1823, 2005. doi: 10.1109/iccv.2005.142

[9] Quelhas, P., Monay, F., Odobez, J.-M., Gatica-Perez, D., Tuytelaars, T. and Van Gool, L., Modeling scenes with local descriptors and latent aspects, IEEE International Conference on Computer Vision (ICCV), pp. 883-890, 2005. doi: 10.1109/iccv.2005.152

[10] Sivic, Josef, Russell, Bryan C., Efros, Alexei A., Zisserman, Andrew and Freeman, William T., Discovering objects and their location in images, IEEE International Conference on Computer Vision (ICCV), pp. 370-377, 2005. doi: 10.1109/iccv.2005.77

Prerequisites:

A strong computational background is essential, preferably with significant experience in Matlab programming. (While experience with other programming languages such as C, C++, or Python may be useful, Matlab is likely to be the common language when individual team members' contributions need to be integrated into joint code.)

Some background in areas such as image/signal processing, optimization theory, or statistical inference would be highly beneficial.