Asynchronous Optimization in Networks: Novel Algorithms and Improved Performance Bounds

Presenter

October 21, 2015

Keywords:

- asynchronous Optimization, Novel Algorithms, synchronization

MSC:

- 94B50

Abstract

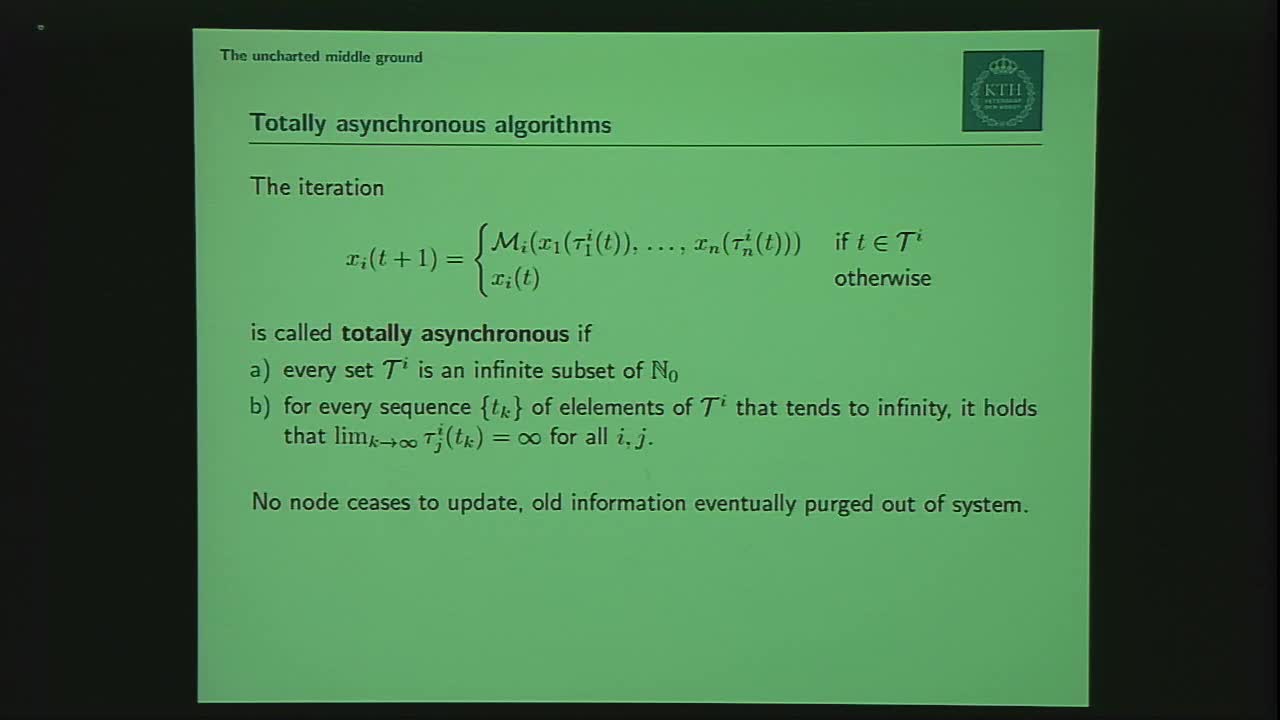

The recent surge in interest in large-scale machine learning and multi-agent coordination has created a demand for distributed optimization algorithms that can be run in a network of cooperating nodes. It is often advantageous to run these algorithms asynchronously, since this simplifies the implementation and eliminates the overhead associated with synchronization. While the effect of asynchronous updates have been well investigated in the literature, general tools for quantifying the impact of time-varying information delays are still limited.

This talk will explore distributed optimization algorithms that combine incremental gradient and coordinate descent methods to deal with problems that involve both a large number of loss functions and a huge number of decision variables. A few simple but powerful results for quantifying the impact on time-varying delays on iterative processes will be introduced. These results will be used to establish delay-independent stability of the proposed methods, and to analyze how to best balance the delay penalty of synchronization with the bias introduced by partial updates. Regimes where the proposed methods outperform alternatives will be highlighted. If time permits, we will discuss experiences from actual implementations on multi-processor architectures.