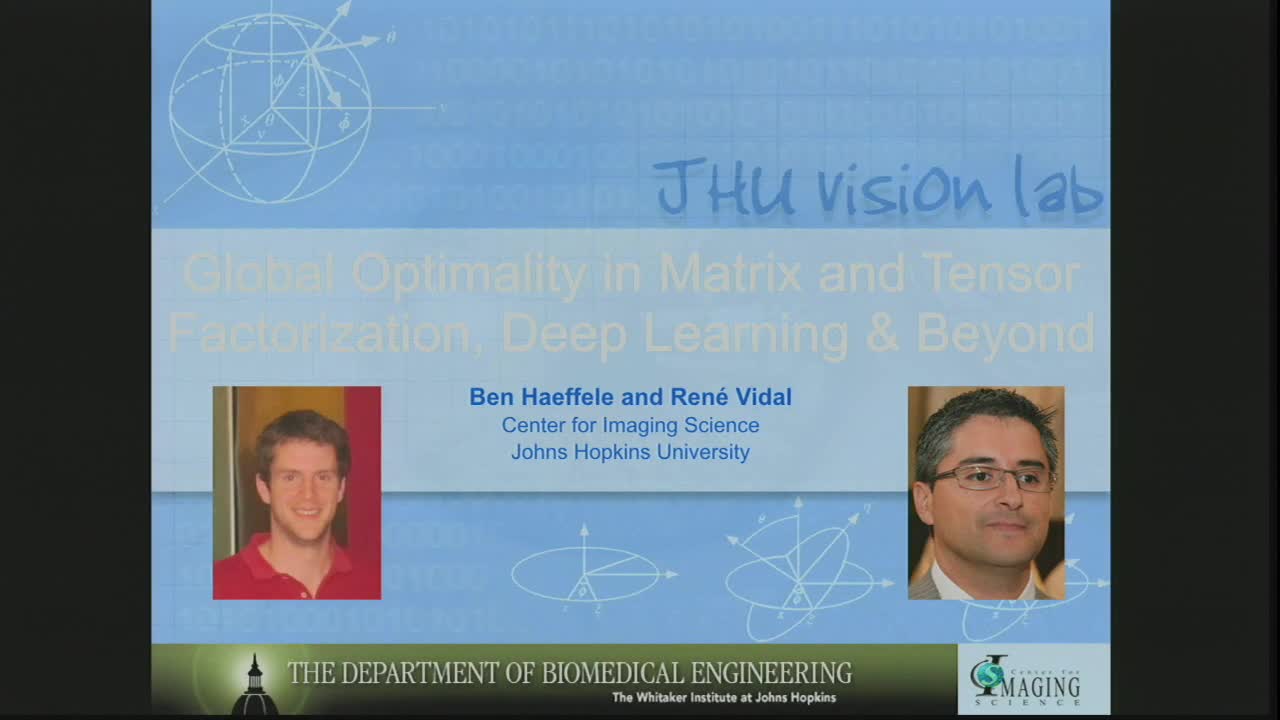

Global Optimality in Matrix and Tensor Factorization, Deep Learning, and Beyond

Presenter

January 27, 2016

Keywords:

- Non-convex optimization, sparse and low-rank matrix factorization, tensor factorization, deep learning

MSC:

- 90C26

Abstract

Matrix, tensor, and other factorization techniques are used in a wide range of applications and have enjoyed significant empirical success in many fields. However, the vast majority of these problems share a significant disadvantage: the associated optimization problems are typically non-convex due to a multilinear form or other convexity-destroying transformation. Building on ideas from convex relaxations of matrix factorizations, in this talk I will present a very general framework that allows for the analysis of a wide range of non-convex factorization problems, including matrix factorization, tensor factorization, and deep neural network training formulations. In particular, I will present sufficient conditions under which a local minimum of the non-convex optimization problem is a global minimum and show that, if the size of the factorized variables is large enough, then from any initialization it is possible to find a global minimizer using a purely local descent algorithm. Our framework also provides a partial theoretical justification for the increasingly common use of Rectified Linear Units (ReLUs) in deep neural networks and offers guidance on deep network architectures and regularization strategies to facilitate efficient optimization. This is joint work with Ben Haeffele.