The Power of Localization for Efficiently Learning with Noise

Presenter

February 27, 2015

Keywords:

- Algorithms

MSC:

- 65Y20

Abstract

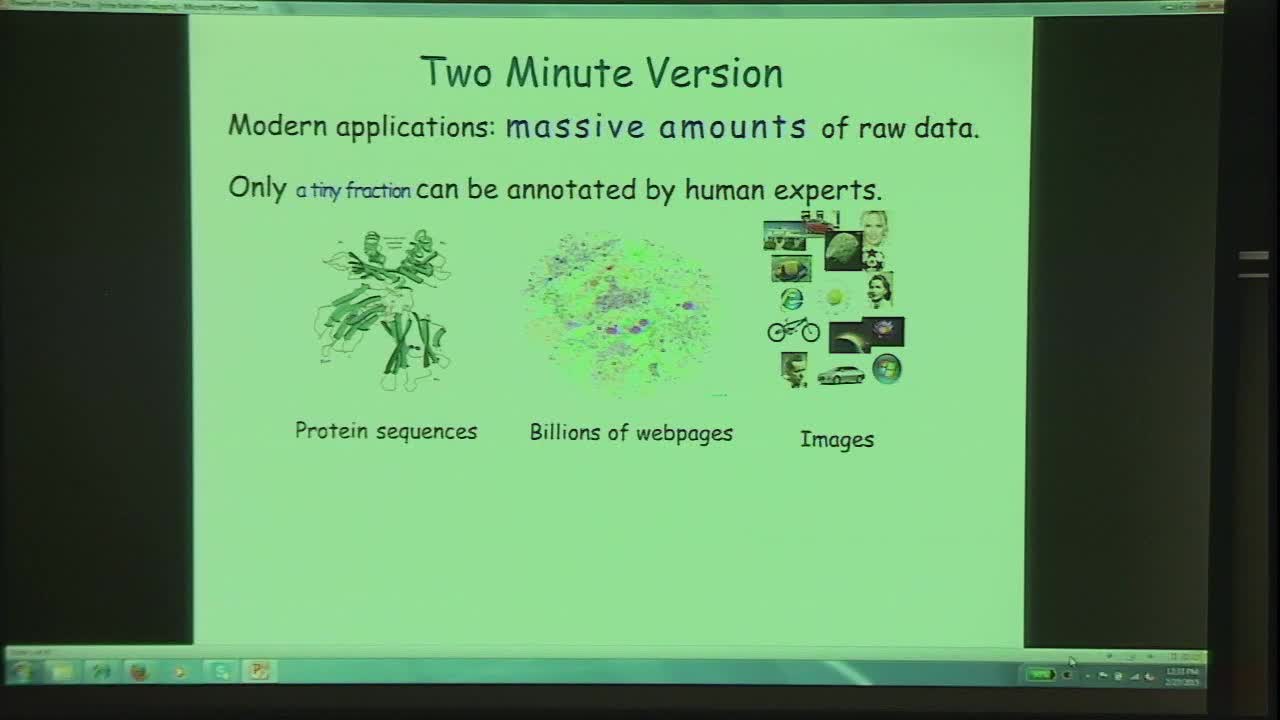

Active learning is an important modern learning paradigm in which the
algorithm itself can ask for labels of carefully chosen examples from a
large pool of unannotated data, with the goal of minimizing human labeling
effort. In this talk, I will present a computationally efficient,
noise-tolerant, and label-efficient active learning algorithm for learning
linear separators under log-concave and nearly log-concave distributions.
Our technique exploits localization in several ways and can be thought of
as essentially solving an adaptively chosen sequence of convex optimization
problems (specifically, hinge loss minimization) in narrower and narrower
bands around the current guess for the target.
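As a rough illustration of the localization idea, the following is a minimal sketch, not the algorithm from the talk. It assumes a standard Gaussian pool (one example of a log-concave distribution), a hypothetical `oracle` callback that returns labels in {-1, +1}, and illustrative schedules (band halved each round, hinge scale and search radius set to half the band). The function names, step sizes, and the projected subgradient solver are all assumptions made for this example.

```python
import numpy as np

def hinge_in_ball(w, X, y, tau, radius, lr=0.1, iters=200):
    """Approximately minimize the hinge loss max(0, 1 - y*<v, x>/tau)
    over the ball ||v - w|| <= radius, by projected subgradient descent."""
    v = w.copy()
    for _ in range(iters):
        active = y * (X @ v) < tau            # points with nonzero hinge loss
        if not active.any():
            break
        # Subgradient of the average hinge loss, rescaled by tau (assumed step rule).
        g = -(y[active][:, None] * X[active]).sum(axis=0) / len(y)
        v = v - lr * radius * g
        d = v - w
        dist = np.linalg.norm(d)
        if dist > radius:                     # project back onto the ball around w
            v = w + d * (radius / dist)
    return v / np.linalg.norm(v)

def localized_active_learner(X_pool, oracle, rounds=8, labels_per_round=200, seed=0):
    """Query labels only inside a shrinking band around the current guess w."""
    rng = np.random.default_rng(seed)
    # Initial guess: average of y*x over a small random labeled sample.
    idx = rng.choice(len(X_pool), labels_per_round, replace=False)
    y = oracle(idx)
    w = (y[:, None] * X_pool[idx]).mean(axis=0)
    w /= np.linalg.norm(w)
    band = 1.0
    for _ in range(rounds):
        in_band = np.flatnonzero(np.abs(X_pool @ w) <= band)
        if len(in_band) == 0:
            break
        idx = rng.choice(in_band, min(labels_per_round, len(in_band)), replace=False)
        w = hinge_in_ball(w, X_pool[idx], oracle(idx), tau=band / 2, radius=band / 2)
        band /= 2                             # localize: narrower band next round
    return w

# Usage on synthetic data: hypothetical target w_star, 5% random label flips.
rng = np.random.default_rng(1)
X = rng.standard_normal((20000, 5))
w_star = np.array([1.0, 0.0, 0.0, 0.0, 0.0])
labels = np.sign(X @ w_star)
labels[rng.random(len(X)) < 0.05] *= -1
w_hat = localized_active_learner(X, lambda idx: labels[idx])
print("cosine between w_hat and w_star:", float(w_hat @ w_star))
```

Random label flips are used here only as a simple stand-in for noise. Each round requests labels solely for points near the current decision boundary, which is where a small error in the guess changes the predicted label.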

Surprisingly, our algorithms not only have label complexity that is much
better than one can hope for in the classic passive learning scenario
(where all the examples are annotated/labeled), but they also have much
better noise tolerance than previously known algorithms for this classic
learning paradigm.