Abstract

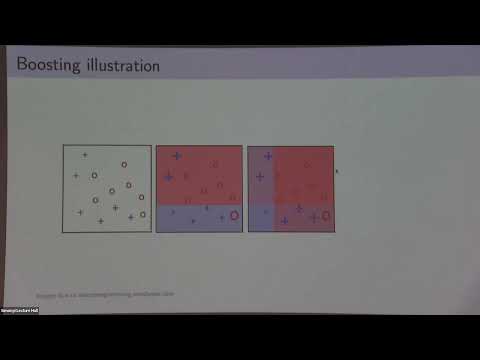

Boosting is a fundamental and widely used method in machine learning, which determines that weak learnability of binary functions implies strong learnability. Traditionally, boosting theory has primarily focused on symmetric 0-1 loss functions, aligning with classical PAC learning theory, from which boosting emerged. However, its extension to more general settings, such as multiclass classification and alternative loss notions, is not straightforward. In this talk, I will discuss recent works in which we develop a comprehensive theory of boosting for a broad class of cost-sensitive and multi-objective loss functions in both the binary and multiclass setting. By leveraging a game-theoretic perspective, we uncover a more nuanced understanding of the boosting landscape. In particular, we provide a taxonomy of learning guarantees, distinguishing those that are trivial (always achievable), boostable (implying strong learning), and intermediate (implying non-trivial yet not arbitrarily accurate learning). Finally, we extend these techniques to PAC learning itself, providing a fine-grained characterization of PAC learning and identifying the Pareto frontier of attainable guarantees for the class.