The Mathematical Sciences Institutes consist of six U.S.-based institutes that receive funding from the National Science Foundation (NSF), an independent U.S. government agency that supports research and education in all non-medical fields of science and engineering.

The math institutes aim to advance research in the mathematical sciences, increase the impact of the mathematical sciences in other disciplines, and expand the talent base engaged in mathematical research in the United States.

Opportunities for Participation

The Institutes host a variety of programs and support participation from a broad cross-section of the research community. Participants include researchers at colleges and universities, industry, and government laboratories, both in the United States and abroad. All Institute programs accept applications for participation. Participants range from undergraduate and graduate students to postdoctoral researchers, faculty members, and employees of nonprofit and for-profit organizations.

The specific offerings vary by Institute and may include:

- Semester-long research programs

- Week-long workshops, which may stand alone or be part of a longer-term program

- One- to two-year postdoctoral visiting positions

- Public lectures on current research, at a level suitable for the general public

- Undergraduate research experiences, typically during the summer

- Interdisciplinary workshops involving collaboration between the mathematical sciences and other fields of science and engineering

- Programs for K-12 educators and K-12 students

- Targeted programs for underrepresented groups

- Crosscutting programs involving collaboration with the arts and humanities

- Summer schools for graduate students

- Professional/career development workshops

Research Highlights

Upcoming Events

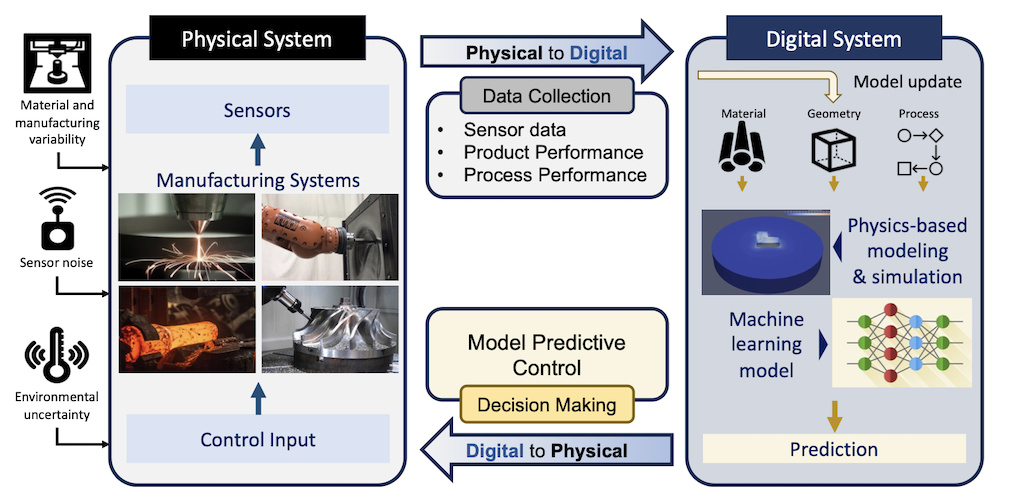

Formal scientific modeling: a case study in global health

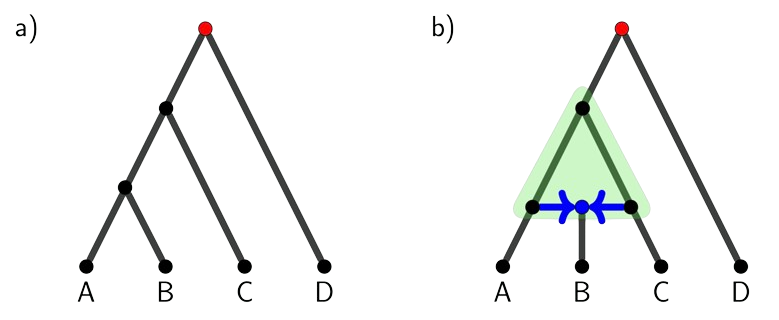

Special Year on Conformally Symplectic Dynamics and Geometry

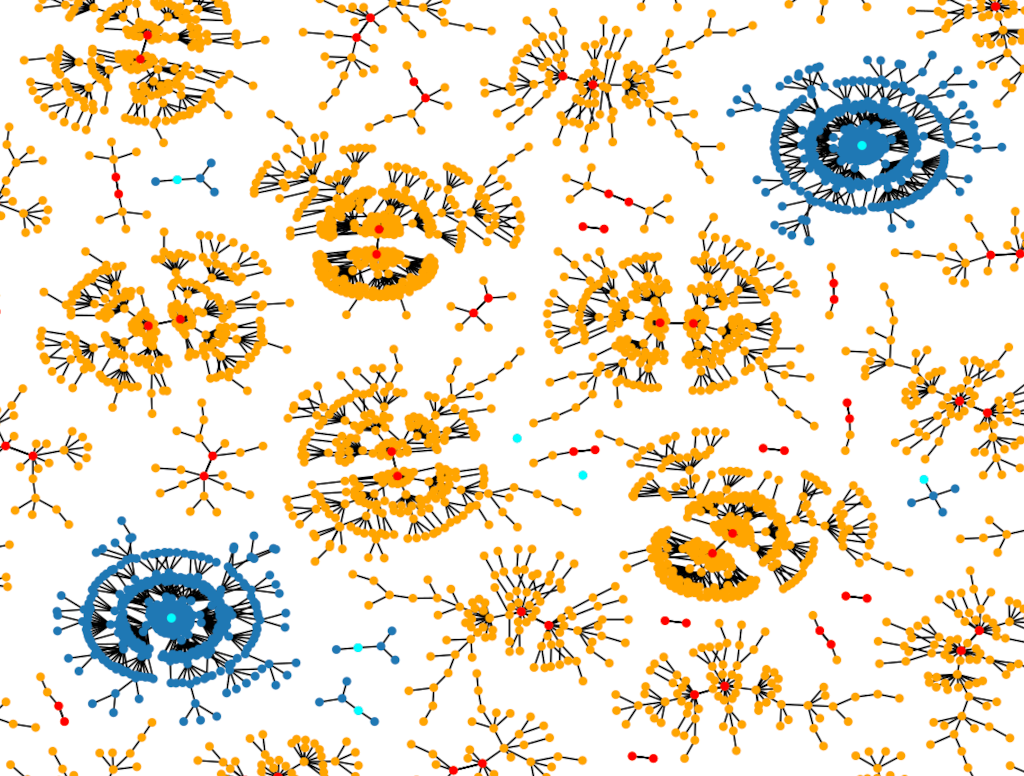

Nonparametric Bayesian Inference - Computational Issues