Introductory Workshop: Algorithms, Fairness, and Equity: "Pretrial Risk Assessment on the Ground: Lessons from New Mexico"

Presenters

August 28, 2023

Keywords:

- algorithms

- fairness

- mechanism design

- graphs and networks

- machine learning

- classification

- policy

- social choice

- computation

Abstract

Using data on 15,000 felony defendants who were released pretrial over a four-year period in Albuquerque, my collaborators and I audited a popular risk assessment algorithm, the Public Safety Assessment (PSA), for accuracy and fairness. But what happened afterward is even more interesting. Using the same data, we audited proposed legislation that would automatically detain large classes of defendants. By treating these laws as algorithms and subjecting them to the same kind of scrutiny, we found that they are predictively inaccurate and would detain many people unnecessarily.
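To make "treating a law as an algorithm" concrete, here is a minimal sketch under stated assumptions. A proposed automatic-detention rule is applied retrospectively to people who were in fact released, so their outcomes are known. It is then scored like any binary classifier: how many people it would detain, and how many of those were actually rearrested. The record fields, the charge categories in the rule, and the toy records are all hypothetical illustrations, not the study's data, the actual legislation, or the authors' audit method.

```python
from dataclasses import dataclass


@dataclass
class Defendant:
    # Hypothetical record: charge category at booking, and whether the
    # person was rearrested while released pretrial.
    charge: str
    rearrested: bool


def detention_rule(d: Defendant) -> bool:
    # Hypothetical "automatic detention" law: detain anyone whose charge
    # falls in a listed category. The categories are illustrative only.
    return d.charge in {"firearm", "violent"}


def audit_rule(defendants, rule):
    """Score a detention rule as a binary classifier against observed outcomes."""
    flagged = [d for d in defendants if rule(d)]
    true_pos = sum(d.rearrested for d in flagged)
    false_pos = len(flagged) - true_pos
    total_rearrested = sum(d.rearrested for d in defendants)
    return {
        "detained": len(flagged),
        "rearrested_among_detained": true_pos,
        # People the rule would have detained who were not rearrested.
        "detained_unnecessarily": false_pos,
        # Precision: fraction of detained people who were rearrested.
        "precision": true_pos / len(flagged) if flagged else float("nan"),
        # Recall: fraction of all rearrests the rule would have prevented.
        "recall": true_pos / total_rearrested if total_rearrested else float("nan"),
    }


if __name__ == "__main__":
    toy = [
        Defendant("firearm", False),
        Defendant("firearm", True),
        Defendant("violent", False),
        Defendant("violent", False),
        Defendant("property", True),
        Defendant("drug", False),
    ]
    # Detains 4 people, only 1 of whom was rearrested (precision 0.25).
    print(audit_rule(toy, detention_rule))
```

On this toy data, the rule detains three people unnecessarily for every one it correctly flags, and it still misses half of the rearrests. That combination of low precision and imperfect recall is the kind of shortfall an audit of this sort is designed to surface.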

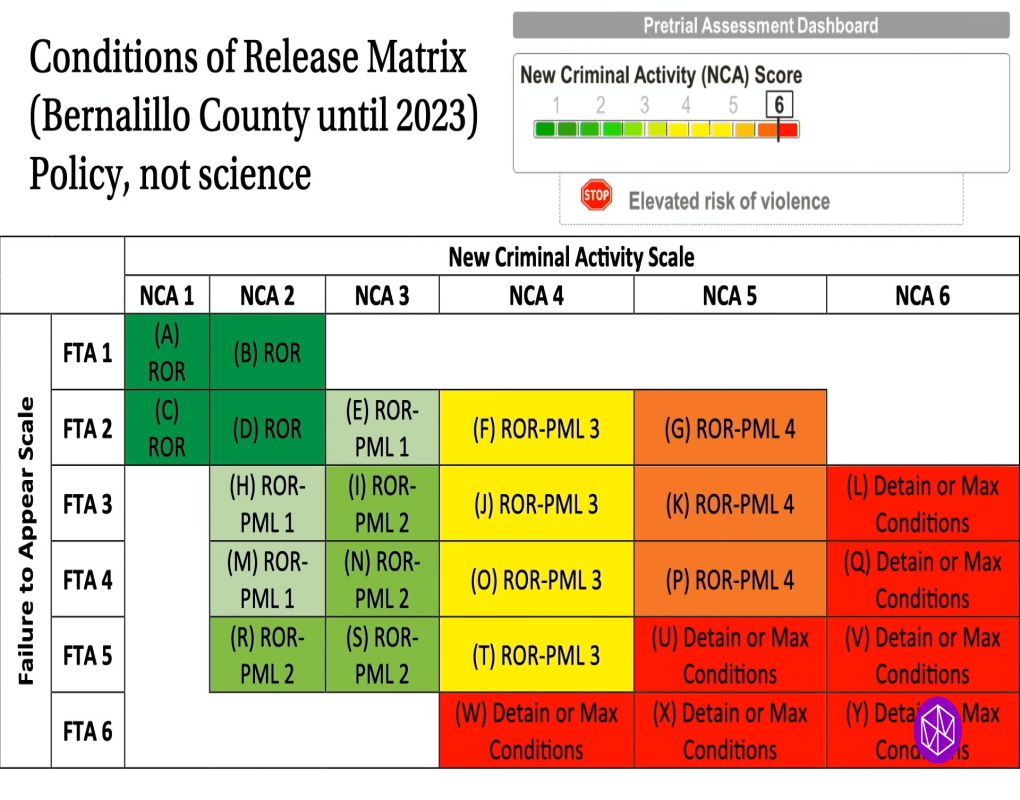

We then looked more closely at the data. Almost all studies of pretrial rearrest lump multiple types and severities of crime together. By digging deeper, we found that rearrest for high-level felonies is very rare: about 0.1% for first-degree and 1% for second-degree felonies. Most rearrests are for fourth-degree felonies, and about one-third are for misdemeanors or petty misdemeanors. We also found that most people with a "failure to appear" miss only one of their hearings, suggesting that they are candidates for supportive interventions rather than detention. This is a good example of a domain where what we need is not better algorithms but better data, along with people who understand what an algorithm's output actually means in terms of probabilities rather than abstract scores like "6" or "orange."
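The disaggregation described above can be sketched as a few counting functions. Each one reports an outcome directly as a probability or share rather than as a score. This is a minimal illustration under assumed inputs: each released defendant has a single recorded outcome (their most serious rearrest charge level, or `None`), and each person has a count of missed hearings. The level labels and toy numbers are hypothetical and do not reproduce the study's data.

```python
from collections import Counter

# Hypothetical charge-level labels for the most serious rearrest charge.
LEVELS = [
    "felony_1", "felony_2", "felony_3", "felony_4",
    "misdemeanor", "petty_misdemeanor",
]


def rearrest_rates_by_level(outcomes):
    """Per released defendant, the probability of rearrest at each level."""
    n = len(outcomes)
    counts = Counter(o for o in outcomes if o is not None)
    return {level: counts[level] / n for level in LEVELS}


def share_of_rearrests(outcomes):
    """Fraction of all rearrests that fall at each charge level."""
    counts = Counter(o for o in outcomes if o is not None)
    total = sum(counts.values())
    return {level: counts[level] / total for level in LEVELS} if total else {}


def share_missing_one_hearing(missed_counts):
    """Among people with at least one failure to appear, the fraction who missed exactly one."""
    with_fta = [m for m in missed_counts if m > 0]
    if not with_fta:
        return float("nan")
    return sum(m == 1 for m in with_fta) / len(with_fta)


if __name__ == "__main__":
    # Toy data: 10 released defendants, 4 of whom were rearrested.
    outcomes = [None] * 6 + ["felony_4", "felony_4", "misdemeanor", "felony_2"]
    print(rearrest_rates_by_level(outcomes))  # e.g. felony_4 -> 0.2
    print(share_of_rearrests(outcomes))       # e.g. felony_4 -> 0.5
    print(share_missing_one_hearing([0, 0, 1, 1, 1, 3]))  # 0.75
```

Separating the per-defendant rate from the share of rearrests matters: a level can account for a large share of rearrests while still being a low-probability event for any individual defendant.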

Finally, I'll discuss how the debate around pretrial detention is playing out in practice. Unlike the concerns raised in ProPublica's 2016 article, the debate is not about algorithms jailing people unfairly. It is the reverse: prosecutors and politicians argue that the PSA underestimates the risk posed by many dangerous defendants, and that these defendants should be detained rather than released.