Johannes Blaschke - ExaFEL: Achieving real-time XFEL data analysis using Exascale Hardware

Presenter

May 4, 2023

Abstract

Recorded 04 May 2023. Johannes Blaschke of Lawrence Berkeley Laboratory presents "ExaFEL: Achieving real-time XFEL data analysis using Exascale Hardware" at IPAM's workshop for Complex Scientific Workflows at Extreme Computational Scales.

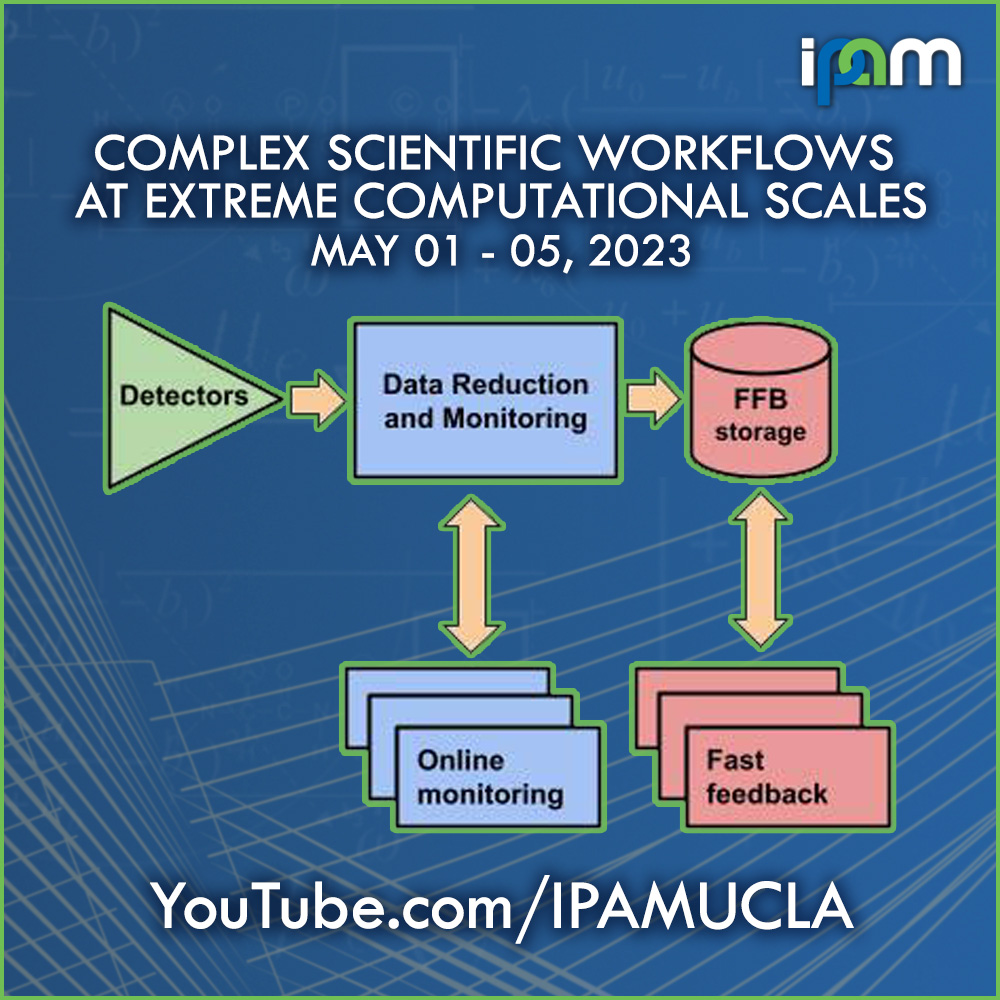

Abstract: With ever-increasing data collection rates, large-scale experiments increasingly rely on high-performance computing (HPC) resources to process data (https://doi.org/10.1109/BigData52589.2021.9671421). This is a particularly difficult challenge when experimental operators require data processing in real time in order to steer experimental methodology. Often terabytes are collected per day, outstripping the local resources. One example of such a workflow is real-time data processing at NERSC for data collected during X-ray Free Electron Laser (XFEL) experiments at the Linac Coherent Light Source (LCLS). Furthermore, live XFEL data processing cannot be completely scripted ahead of time, as the samples are usually unknown, and the experiments tend to be fairly hands-on. The challenge to HPC is clear: in order to spend valuable beamtime wisely, data needs to be analyzed within minutes of being collected at LCLS -- while accepting user input, and presenting results in a low-effort way.

Here we examine one such workflow (https://arxiv.org/abs/2106.11469) which analyzes data collected at LCLS in real time at NERSC. This workflow is scripted in Python (allowing users to rapidly make changes during an experiment), while offloading as many of the computationally intensive components as possible to C++ and Kokkos. A simple pipeline management engine is responsible for translating user inputs (such as new calibration constants) to Slurm job scripts.
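To make the idea of translating user inputs to Slurm job scripts concrete, the following is a minimal sketch and not the pipeline engine described in the talk. The `AnalysisRequest` fields, the `render_slurm_script` helper, and the `process_run.py` command with its `--run` and `--calib` flags are all hypothetical names chosen for illustration; only the `#SBATCH` directives and `srun` are standard Slurm.

```python
import shlex
from dataclasses import dataclass, field


@dataclass
class AnalysisRequest:
    """Hypothetical user input for one analysis job (illustrative only)."""
    run_number: int
    calibration_file: str          # e.g. a file holding new calibration constants
    nodes: int = 4
    walltime_minutes: int = 30
    extra_args: list = field(default_factory=list)


def render_slurm_script(req: AnalysisRequest,
                        command: str = "python process_run.py") -> str:
    """Render a Slurm batch script as text from the user's request.

    `command` and its flags are assumptions, not part of any real tool.
    """
    hours, minutes = divmod(req.walltime_minutes, 60)
    args = [f"--run={req.run_number}",
            f"--calib={req.calibration_file}",
            *req.extra_args]
    # Quote each argument so user-supplied values cannot break the shell line.
    arg_str = " ".join(shlex.quote(a) for a in args)
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name=run{req.run_number:04d}",
        f"#SBATCH --nodes={req.nodes}",
        f"#SBATCH --time={hours:02d}:{minutes:02d}:00",
        "",
        f"srun {command} {arg_str}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    request = AnalysisRequest(run_number=42,
                              calibration_file="calib_v2.json",
                              walltime_minutes=90)
    print(render_slurm_script(request))
```

Under these assumptions, an operator who changes a calibration file only edits the request, and the engine regenerates the batch script; the resulting text could then be written to a file and handed to `sbatch`.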

We also examine the workflow performance data collected over two years of beamtimes. Using the job turnaround data and performance data from instrumented code, we can infer the frequency at which experimental operators interact with the data analysis workflow (i.e., the time spent making changes to the experiment, the time spent interpreting data, and the time spent making inputs/changes to the data analysis software). We present these data in the context of other data analysis workflows running at NERSC.
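One way such an inference might be set up is sketched below; this is an assumption about the general approach, not the analysis presented in the talk. Each job record is a hypothetical dictionary with `submit` and `end` timestamps. The turnaround is taken as `end - submit`, and the idle gap between one job finishing and the next being submitted is treated as a rough proxy for the time operators spend interpreting results and preparing new inputs.

```python
from datetime import datetime
from statistics import median


def turnaround_and_gaps(jobs):
    """Return (turnaround_seconds, gap_seconds) for a list of job records.

    Each record is a dict with hypothetical keys "submit" and "end"
    holding datetime objects. Gaps are clamped at zero when the next
    job was submitted before the previous one finished.
    """
    ordered = sorted(jobs, key=lambda j: j["submit"])
    turnaround = [(j["end"] - j["submit"]).total_seconds() for j in ordered]
    gaps = [
        max(0.0, (nxt["submit"] - prev["end"]).total_seconds())
        for prev, nxt in zip(ordered, ordered[1:])
    ]
    return turnaround, gaps


if __name__ == "__main__":
    # Made-up timestamps, purely to exercise the function.
    day = dict(year=2023, month=5, day=4)
    jobs = [
        {"submit": datetime(hour=10, minute=0, **day),
         "end": datetime(hour=10, minute=5, **day)},
        {"submit": datetime(hour=10, minute=12, **day),
         "end": datetime(hour=10, minute=15, **day)},
        {"submit": datetime(hour=10, minute=14, **day),
         "end": datetime(hour=10, minute=20, **day)},
    ]
    turnaround, gaps = turnaround_and_gaps(jobs)
    print("median turnaround (s):", median(turnaround))
    print("median gap between jobs (s):", median(gaps))
```

Separating the gap into time spent on the experiment, on interpretation, and on software inputs would need additional signals, such as the instrumented-code performance data mentioned above; the sketch only shows the turnaround and gap bookkeeping.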

We hope that these insights provide a basis for future workflow design, performance monitoring and benchmarking of other interactive workflows in the experimental sciences.

Learn more online at: http://www.ipam.ucla.edu/programs/workshops/workshop-iii-complex-scientific-workflows-at-extreme-computational-scales/