Multi-group fairness, loss minimization and indistinguishability

Presenter

April 12, 2022

Abstract

Training a predictor to minimize a loss function fixed in advance is the dominant paradigm in machine learning. However, loss minimization by itself might not guarantee desiderata like fairness and accuracy that one could reasonably expect from a predictor. To remedy this, multi-group fairness notions such as multiaccuracy and multicalibration have been proposed, which require the predictor to share certain statistical properties of the ground truth, even when conditioned on a rich family of subgroups. There is no explicit attempt at loss minimization.

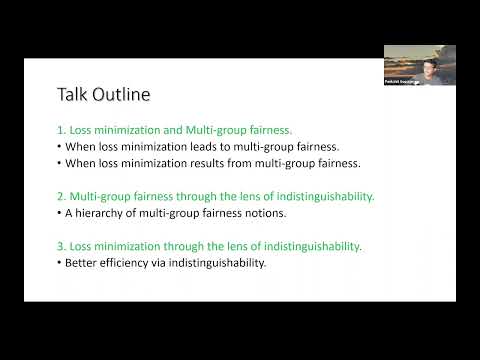

In this talk, we will describe some recently discovered connections between loss minimization and multi-group fairness. We will explore settings where one can lead to the other, and other settings where this is unlikely. We will see how the quest for more efficiently computable notions of multigroup fairness leads to natural analogies with the complexity theoretic notions of indistinguishability and derandomization, where the goal is for our predictor to replicate certain desirable properties of the ground truth.

In doing so, we will introduce some novel notions such as that of an omnipredictor, whose predictions are simultaneously "optimal" for a large class of loss functions and hypothesis, and low-degree multicalibration, which interpolates smoothly between multiaccuracy and multicalibration, and can be viewed as an analog of the notion of bounded independence in pseudorandomness.

This is based on joint works with Adam Kalai, Michael Kim, Omer Reingold, Vatsal Sharan, Mihir Singhal, Udi Wieder and Shengjia Zhao.