Calibrated Inference: Statistical Inference that Accounts for Both Sampling Uncertainty and Distributional Uncertainty

Presenter

March 9, 2022

Keywords:

- stability

- robustness

- replicability

- uncertainty quantification

MSC:

- 62A01

- 62F12

Abstract

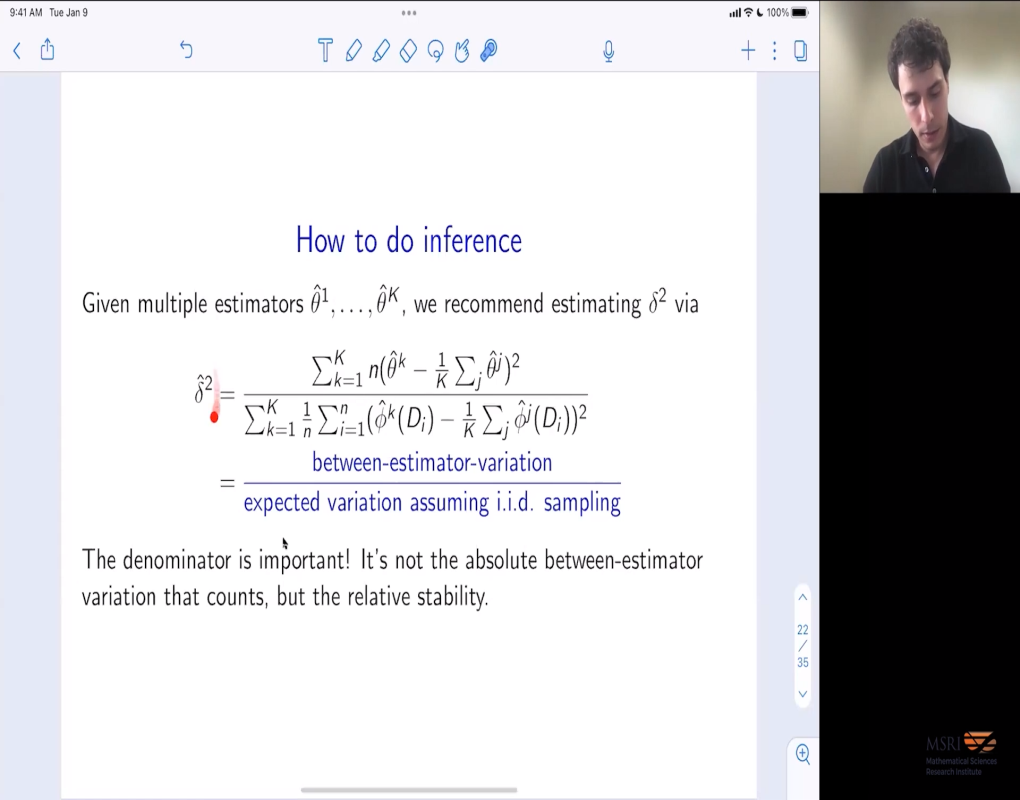

During data analysis, analysts often have to make seemingly arbitrary decisions. For example, during data pre-processing there are a variety of options for dealing with outliers or imputing missing data. Similarly, many model specifications and methods may be reasonable for addressing a given domain question. This may be seen as a hindrance to reliable inference, since conclusions can change depending on the analyst's choices. In this paper, we argue that this situation is an opportunity to construct confidence intervals that account not only for sampling uncertainty but also for a form of distributional uncertainty. Distributional uncertainty is closely related to other issues in data analysis, ranging from dependence between observations to selection bias and confounding. We demonstrate the utility of the approach on simulated and real-world data. This is joint work with Yujin Jeong.
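To make the first point concrete, here is a hypothetical Python sketch, not the procedure developed in this work, in which the same mean is estimated under several plausible outlier-removal rules. The MAD-based trimming rule, the cutoff values, the simulated data, and the helper names `trim_and_estimate` and `naive_combined_interval` are all illustrative assumptions. The sketch pools the average sampling variance with the variance of the estimates across choices, a crude heuristic that only shows why an interval reflecting a single analyst choice can be too narrow.

```python
import math
import random
import statistics


def trim_and_estimate(data, cutoff):
    """Hypothetical outlier rule: drop points more than `cutoff` MADs from the
    median, then return the mean of the kept points and its standard error."""
    med = statistics.median(data)
    mad = statistics.median([abs(x - med) for x in data])
    kept = [x for x in data if abs(x - med) <= cutoff * mad]
    estimate = statistics.mean(kept)
    std_error = statistics.stdev(kept) / math.sqrt(len(kept))
    return estimate, std_error


def naive_combined_interval(data, cutoffs, z=1.96):
    """Crude heuristic (not the calibrated method): widen a normal-approximation
    interval by the spread of estimates across analyst choices."""
    results = [trim_and_estimate(data, c) for c in cutoffs]
    estimates = [est for est, _ in results]
    center = statistics.mean(estimates)
    # Average sampling variance across the analyst choices.
    sampling_var = statistics.mean([se ** 2 for _, se in results])
    # Variance of the estimates across choices, used as a rough proxy
    # for the extra uncertainty introduced by the choices themselves.
    choice_var = statistics.variance(estimates) if len(estimates) > 1 else 0.0
    half_width = z * math.sqrt(sampling_var + choice_var)
    return (center - half_width, center + half_width,
            math.sqrt(sampling_var), math.sqrt(choice_var))


# Simulated data: mostly standard normal, plus a few large values whose
# treatment depends on the chosen outlier cutoff.
random.seed(0)
data = [random.gauss(0.0, 1.0) for _ in range(200)] + [8.0, 9.5, 12.0]
lo, hi, se, spread = naive_combined_interval(data, cutoffs=[2.0, 3.0, 5.0, 100.0])
print(f"interval: ({lo:.3f}, {hi:.3f})")
print(f"sampling SE: {se:.3f}, spread across choices: {spread:.3f}")
```

Treating the between-choice spread as additional variance is a simple heuristic chosen for illustration; it is not a substitute for the calibrated intervals described in this talk.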