Near-Optimal Compression in Near-Linear Time

Presenter

March 8, 2022

Keywords:

- compression

- MCMC

- kernel

- coresets

- maximum mean discrepancy

Abstract

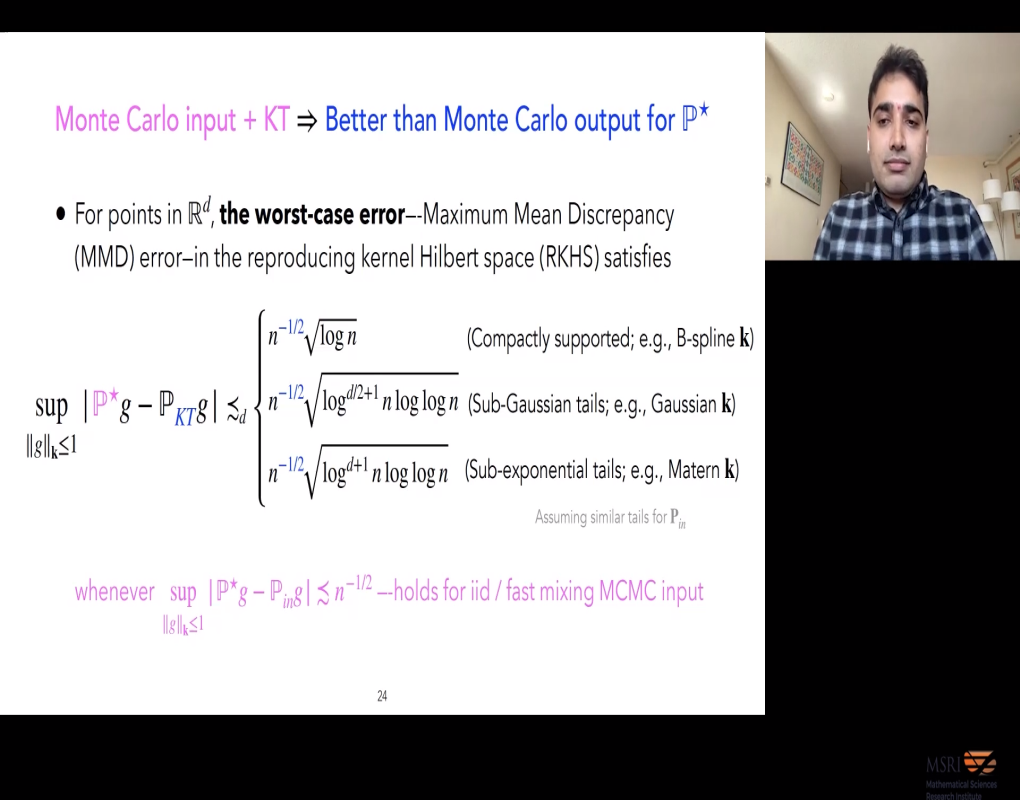

We introduce Kernel Thinning-Compress++ (KT-Compress++), an algorithm based on two new procedures for compressing a distribution P nearly optimally and more effectively than i.i.d. sampling or standard thinning. Given a suitable reproducing kernel k and O(n log^3 n) time, KT-Compress++ compresses an n-point approximation to P into a sqrt(n)-point approximation with better than Monte Carlo integration error rates for functions in the associated reproducing kernel Hilbert space (RKHS). First we show that for any fixed function KT-Compress++ provides dimension-free guarantees for any kernel, any distribution, and any fixed function in the RKHS. Second, we show that with high probability, the maximum discrepancy in integration error is O_d(n^{-1/2} sqrt(log n)) for compactly supported P and O_d(n^{-1/2} (log n)^{(d+1)/2} sqrt(loglog n)) for sub-exponential P on R^d. In contrast, an equal-sized i.i.d. sample from P suffers at least n^{-1/4} integration error. Our sub-exponential guarantees nearly match the known lower bounds for several settings, and while resembling the classical quasi-Monte Carlo error rates for uniform P on [0,1]^d they apply to general distributions on R^d and a wide range of commonly used universal kernels. En route, we introduce a new simple meta-procedure Compress++, that can speed up any thinning algorithm while suffering at most a factor of 4 n error. In particular, Compress++ enjoys a near-linear runtime given any quadratic-time input and reduces the runtime of super-quadratic algorithms by a square-root factor. We also use our results to derive explicit non-asymptotic maximum mean discrepancy bounds for a range of kernels including Gaussian, Matern, Laplace, and B-spline kernels. Finally, we present several vignettes illustrating the practical benefits of KT-Compress++ over i.i.d. sampling and standard Markov chain Monte Carlo thinning with challenging differential equation posteriors in dimensions d = 2 to 100.