Nerve theorems for fixed points of neural networks

Presenter

May 7, 2021

Keywords:

- computational neuroscience

- CTLN

- graph rules

- nerve theorem

MSC:

- 05C20

Abstract

A fundamental question in computational neuroscience is to understand how a network’s connectivity shapes neural activity. A popular framework for modeling neural activity is a class of recurrent neural networks called threshold-linear networks (TLNs). A special case is combinatorial threshold-linear networks (CTLNs), whose dynamics are completely determined by the structure of a directed graph. This makes them an ideal setting in which to study the relationship between connectivity and activity.
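For reference, here is the CTLN model as it is usually written in the Curto and Morrison line of work. The abstract itself does not state these equations. A directed graph $G$ on $n$ nodes determines the dynamics of firing rates $x_i \geq 0$:

$$
\frac{dx_i}{dt} = -x_i + \Big[\sum_{j=1}^{n} W_{ij}\, x_j + \theta\Big]_+, \qquad i = 1, \dots, n,
$$

$$
W_{ij} =
\begin{cases}
0 & \text{if } i = j,\\
-1 + \varepsilon & \text{if } j \to i \text{ in } G,\\
-1 - \delta & \text{if } j \not\to i \text{ in } G,
\end{cases}
$$

Here $[y]_+ = \max\{y, 0\}$, and the parameters satisfy $\theta > 0$, $\delta > 0$ and $0 < \varepsilon < \frac{\delta}{\delta + 1}$. The graph enters only through which off-diagonal entries are $-1 + \varepsilon$ (weak inhibition) and which are $-1 - \delta$ (strong inhibition). This is the precise sense in which the dynamics are "determined by the structure of a directed graph." Values such as $\varepsilon = 0.25$, $\delta = 0.5$ and $\theta = 1$ are commonly used in that literature, but they are only one admissible choice.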

Even though nonlinear network dynamics are notoriously difficult to understand, work of Curto, Geneson, and Morrison shows that CTLNs are surprisingly tractable mathematically. In particular, for small networks the fixed points of the network dynamics can often be completely determined by a series of combinatorial *graph rules* that apply directly to the underlying graph. For larger networks, however, it remains a challenge to understand how the global structure of the network interacts with its local properties.
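To make "fixed points of the network dynamics" concrete, the sketch below finds them by brute force for a tiny graph. It assumes the standard CTLN weights ($W_{ij} = -1+\varepsilon$ if $j \to i$, $-1-\delta$ otherwise, zero diagonal) with the commonly used defaults $\varepsilon = 0.25$, $\delta = 0.5$, $\theta = 1$. The method is deliberately *not* the graph rules or the nerve theorems. It simply tries every support $\sigma$, solves $(I - W_\sigma)\,x_\sigma = \theta\mathbf{1}$, and keeps $\sigma$ if $x_\sigma > 0$ and every neuron off the support receives net negative input. The function names `ctln_weights` and `fixed_point_supports` are hypothetical illustrations. Because the search is exponential in $n$, it is exactly the kind of computation that combinatorial rules are meant to avoid.

```python
from itertools import combinations

import numpy as np


def ctln_weights(n, edges, eps=0.25, delta=0.5):
    """Hypothetical helper: CTLN weight matrix for a directed graph on nodes 0..n-1.

    An edge (j, i) means j -> i, giving W[i, j] = -1 + eps; all other
    off-diagonal entries are -1 - delta, and the diagonal is zero.
    """
    W = np.full((n, n), -1.0 - delta)
    for j, i in edges:
        W[i, j] = -1.0 + eps
    np.fill_diagonal(W, 0.0)
    return W


def fixed_point_supports(W, theta=1.0, tol=1e-12):
    """Brute-force search for supports of fixed points of dx/dt = -x + [W x + theta]_+.

    Hypothetical helper. Degenerate boundary cases (zero net input off the
    support, or a singular subsystem) are skipped rather than handled.
    """
    n = W.shape[0]
    supports = []
    for k in range(1, n + 1):
        for sigma in combinations(range(n), k):
            idx = list(sigma)
            # On the support, x_sigma = W_sigma x_sigma + theta, i.e. (I - W_sigma) x_sigma = theta.
            A = np.eye(k) - W[np.ix_(idx, idx)]
            try:
                x_sigma = np.linalg.solve(A, theta * np.ones(k))
            except np.linalg.LinAlgError:
                continue
            # Every neuron in the support must be strictly active.
            if np.any(x_sigma <= tol):
                continue
            x = np.zeros(n)
            x[idx] = x_sigma
            # Every neuron off the support must receive strictly negative net input.
            off = [i for i in range(n) if i not in sigma]
            if off and np.any(W[off] @ x + theta >= -tol):
                continue
            supports.append(sigma)
    return supports
```

For example, for the 3-cycle 0 → 1 → 2 → 0, `fixed_point_supports(ctln_weights(3, [(0, 1), (1, 2), (2, 0)]))` returns only the full support `(0, 1, 2)`. For the single edge 0 → 1 it returns `[(1,)]`, the sink. Both results are consistent with small-graph rules reported in the CTLN literature.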

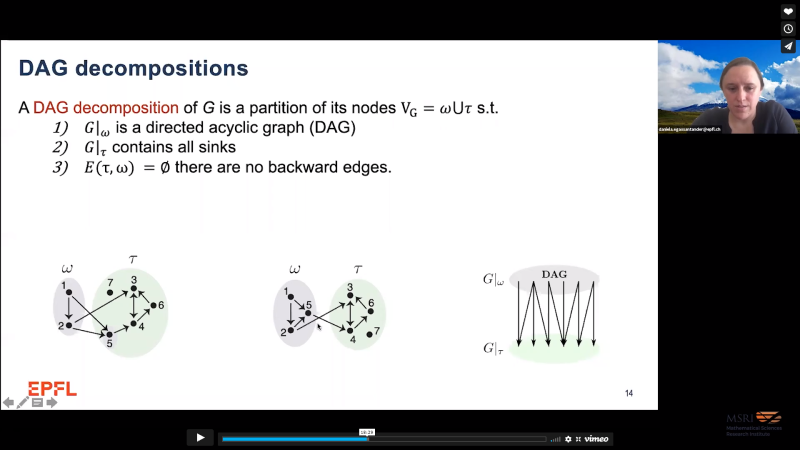

In this talk, we present a method of covering the graphs of CTLNs with a set of smaller *directional graphs* that reflect the local flow of activity. The combinatorial structure of the graph cover is captured by the *nerve* of the cover, a smaller, simpler graph that is more amenable to graphical analysis. We present three “nerve theorems” that use the structure of the nerve to place strong constraints on the fixed points of the underlying network. In effect, they provide a kind of “dimensionality reduction” for the dynamical system of the underlying CTLN. We illustrate the power of our results with several examples.

This is joint work with F. Burtscher, C. Curto, S. Ebli, K. Morrison, A. Patania, and N. Sanderson.