Statistical Learning Theory for Modern Machine Learning

Presenter

August 11, 2020

Abstract

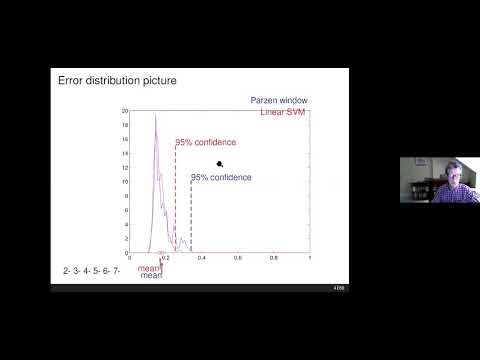

Probably Approximately Correct (PAC) learning attempts to analyse the generalisation of learning systems within the statistical learning framework. It has been described as a ‘worst-case’ analysis, but its tools have been extended to cases where benign data distributions allow us to generalise even when worst-case bounds suggest we cannot. The talk will cover the PAC-Bayes approach to analysing generalisation, which is inspired by Bayesian inference but assigns different roles to the prior and posterior distributions. We will discuss its application to Support Vector Machines and Deep Neural Networks, including the use of distribution-defined priors.
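To make the different roles of prior and posterior concrete, here is a minimal sketch of one commonly used PAC-Bayes bound, the PAC-Bayes-kl form given by Maurer. This is an assumption about which variant is relevant, and the talk may use others. For a prior P chosen before seeing the m training examples, with probability at least 1 - delta, every posterior Q satisfies kl(empirical risk of Q || true risk of Q) <= (KL(Q||P) + ln(2*sqrt(m)/delta)) / m, where kl is the divergence between two Bernoulli distributions. The prior only needs to be independent of the training sample, and Q can be any distribution, not necessarily the Bayesian posterior. The function names below are hypothetical, and the empirical risk and KL(Q||P) are taken as given inputs.

```python
import math


def binary_kl(q: float, p: float) -> float:
    """KL divergence kl(q || p) between Bernoulli(q) and Bernoulli(p)."""
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)  # keep logs finite at the endpoints
    result = 0.0
    if q > 0:
        result += q * math.log(q / p)
    if q < 1:
        result += (1 - q) * math.log((1 - q) / (1 - p))
    return result


def kl_inverse_upper(emp_risk: float, rhs: float, tol: float = 1e-9) -> float:
    """Smallest p found by bisection with kl(emp_risk || p) >= rhs, i.e. an
    upper bound on the true risk. kl(q || p) increases in p for p >= q."""
    lo, hi = emp_risk, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if binary_kl(emp_risk, mid) > rhs:
            hi = mid  # mid is already outside the allowed region
        else:
            lo = mid  # mid is still consistent with the bound
    return hi  # conservative end of the final interval


def pac_bayes_kl_bound(emp_risk: float, kl_qp: float, m: int,
                       delta: float = 0.05) -> float:
    """Hypothetical helper: upper bound on the true risk of posterior Q.

    emp_risk -- empirical (stochastic) risk of Q on the m training examples
    kl_qp    -- KL(Q || P), where P was fixed before seeing the data
    """
    rhs = (kl_qp + math.log(2 * math.sqrt(m) / delta)) / m
    return kl_inverse_upper(emp_risk, rhs)


if __name__ == "__main__":
    # A posterior close to the prior (small KL) yields a tighter bound than
    # one far from it, for the same empirical risk and sample size.
    print(pac_bayes_kl_bound(0.05, kl_qp=5.0, m=10_000))
    print(pac_bayes_kl_bound(0.05, kl_qp=500.0, m=10_000))
```

The kl divergence has no closed-form inverse, so bisection is used. This is also why a prior that is better matched to the data (for example, one defined from the data distribution rather than fixed arbitrarily) can tighten the bound: it reduces KL(Q||P).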