Abstract

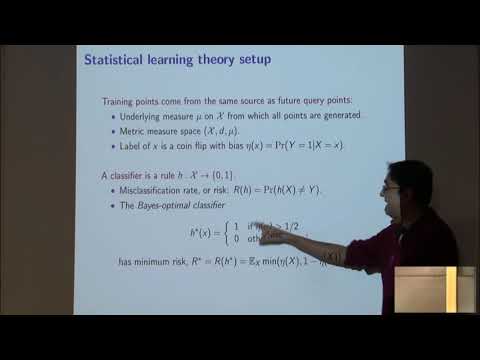

The "nearest neighbor (NN) classifier" labels a new data instance by taking a majority vote over the k most similar instances seen in the past. With an appropriate setting of k, it is capable of modeling arbitrary decision rules. Traditional convergence analysis for nearest neighbor, as well as for other nonparametric estimators, has focused on two questions: (1) universal consistency, that is, convergence (as the number of data points goes to infinity) to the best-possible classifier without any conditions on the data-generating distribution, and (2) rates of convergence that are minimax-optimal, assuming that the data distribution lies within some standard class of smooth functions. We advance what is known on both these fronts. But we also show how to attain significantly stronger types of results: (3) rates of convergence that are accurate for the specific data distribution, rather than being generic for a smoothness class, and (4) rates that are accurate for the distribution as well as the specific query point. Along the way, we introduce a notion of "margin" for nearest neighbor classification. This is a function m(x) that assigns a positive real number to every point in the input space; the size of the data set needed for NN (with adaptive choice of k) to predict correctly at x is, roughly, 1/m(x). The statistical background needed for understanding these results is minimal and will be introduced during the talk. This is joint work with Akshay Balsubramani, Kamalika Chaudhuri, Yoav Freund, and Shay Moran.
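
For readers unfamiliar with the majority-vote rule described above, the following is a minimal illustrative sketch, not the method analyzed in the talk. It assumes Euclidean distance as the similarity measure and a fixed k supplied by the caller; the adaptive choice of k mentioned in the abstract is not implemented. The function name `knn_predict` and the toy data are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Label `query` by majority vote over its k nearest training points.

    `train` is a list of (point, label) pairs, where each point is a tuple
    of floats. Euclidean distance is an assumption made for illustration.
    """
    # Rank training instances by distance to the query and keep the k closest.
    neighbors = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    # Count the labels among those neighbors and return the most frequent one.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Toy data set (hypothetical): two well-separated clusters.
train = [
    ((0.0, 0.0), "a"),
    ((0.1, 0.2), "a"),
    ((0.2, 0.1), "a"),
    ((1.0, 1.0), "b"),
    ((0.9, 1.1), "b"),
]

prediction = knn_predict(train, (0.05, 0.05), k=3)
```

Using an odd k avoids ties in the two-class case; with an even k, this sketch breaks ties in favor of the label encountered first among the nearest neighbors.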